From Marathons to Combat Competitions: How Far Are Humanoid Robots from a Singularity Moment in Education?

In 2025, humanoid robots and Embodied AI are moving from laboratories to large-scale deployment and have become a focal point of global technological and capital competition. The examples range from Tesla Optimus working round-the-clock “007” factory shifts to Unitree Robotics’ rental ecosystem for commercial performances, and from Qingdao’s rehabilitation robots for “unmanned housekeeping” to Dobot Atom as a “family breakfast assistant”. This industrial transformation, driven by large AI models and a hardware revolution, is reshaping how human society produces and lives.

Have you ever imagined robots with human-like appearance and intelligence moving freely through our lives? These machines, known as humanoid robots, embody cutting-edge technologies that integrate artificial intelligence, high-end manufacturing, new materials, and more. According to the Guidelines for the Innovative Development of Humanoid Robots issued by the Ministry of Industry and Information Technology, humanoid robots are expected to become the next disruptive product after computers, smartphones, and new energy vehicles.

Unlike traditional robots, humanoid robots not only look more human but are also gradually acquiring autonomous thinking and motor control capabilities close to those of humans. They consist of a “trunk” that mimics the human body structure (bipedal legs, arms, head, and connecting parts), a “brain” with cognitive intelligence, and a “cerebellum” that ensures dexterous, smooth movement. Their human-like appearance makes them more approachable in human-robot interaction, fits the “human-centered” design concept of intelligent robots, and suits them to spaces built for humans, where tasks require both hands and feet.

It is estimated that the global humanoid robot market has reached 3.28 billion US dollars, and China’s industry is predicted to exceed 20 billion yuan within the next three years. Humanoid robots are also expected to account for more than 20% of all robot application forms. Driven by policy, investment, and market demand, humanoid robots are ushering in a new intelligent era.

1. The Development History of Humanoid Robots

How did these humanoid robots, which can walk and think like humans and may one day even express emotions, evolve step by step?

Early Exploration (1969–2000): Mechanical Imitation

The story of humanoid robots began in the late 1960s. Limited by the computing power and sensing technologies of the time, humanoid robots in this period could only perform simple repetitive tasks or pre-programmed operations.

Integrated Development (2000–2015): Emergence of Perceptual Capabilities

In the new century, humanoid robots entered a stage of highly integrated development. As sensor technologies advanced and visual, force, and auditory sensors came into use, robots began to gain basic environmental perception and could execute complex tasks with more advanced control algorithms. However, true intelligence was still constrained by algorithmic complexity and limited computing power.

Dynamic and Intelligent Advancement (2015–2022): Motion and Cognition

The initial application of deep learning and reinforcement learning marked the technological breakthrough of this stage. Although humanoid robots made significant progress in motor capabilities, their autonomous environmental perception and real-time decision-making still needed improvement.

Explosive Growth Period (2022–Present): Marching Toward Embodied AI

With the development of large-scale AI models and successive generations of high-performance computing platforms, the new generation of robots has made significant progress in natural language processing, emotion recognition, and complex task execution. The deep integration of software and hardware has ushered in a new era of embodied intelligent agents.

Embodied intelligence emphasizes that robots interact with the environment through their physical bodies, perceiving, making decisions, and executing tasks in real time. Combining it with large models is like giving robots a “smart brain”.

Whereas most traditional AI relies on symbolic processing and static inputs, embodied robots use techniques such as deep learning and reinforcement learning to keep learning and adapting from actual physical experience.

For example, the “Great Wall” series model “WALL-A”, developed by Independent Variable Robots, not only integrates visual, language, and action signals but also achieves zero-shot generalization to unseen scenarios, completing complex tasks there.

Large-scale models provide “underlying intelligence” for Embodied AI, which can be divided into two categories:

General large models, as large-scale pre-trained models covering multiple scenarios and tasks, possess high adaptability and transferability, capable of providing fundamental intelligent support for humanoid robots.

Vertical large models are customized for specific domains to improve efficiency and precision in professional skill tasks. For example, Star Era has launched the general-purpose humanoid robot STAR1, equipped with its native large model ERA-42. Using the XHAND1 dexterous hand (12 degrees of freedom), it performs precise operations such as electronic component assembly and medical assistance.

2.Industrial Chain Layout of Humanoid Robots

If humanoid robots are seen as important representatives of the future intelligent society, then their “brain”, “cerebellum”, and “body” are the three core pillars for realizing this vision. With continuous breakthroughs in artificial intelligence, perception technologies, and precision manufacturing, modern humanoid robots are no longer simple execution tools but intelligent agents capable of perceiving, deciding, and acting.

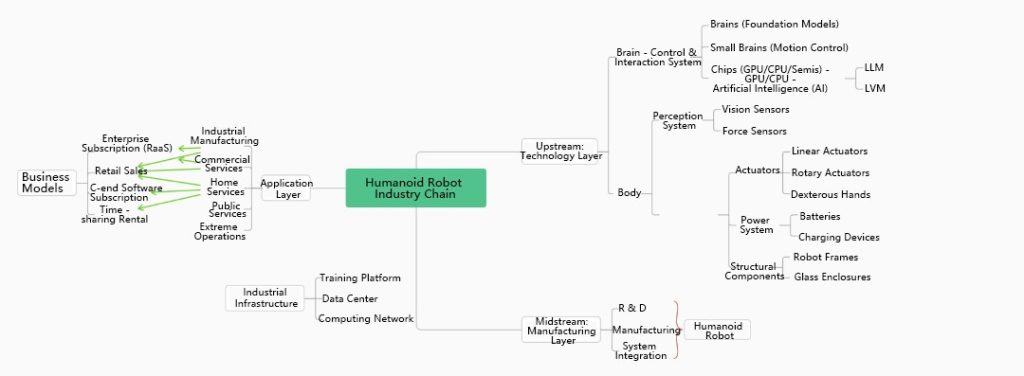

The industrial chain of humanoid robots has initially formed a three-tier structure of “upstream core technologies, midstream complete machine manufacturing, and downstream application scenarios”, reflecting a trend toward interdisciplinary technology integration and cross-industry collaboration.

Upstream: Core Technologies

(1) Brain: The “Silicon Nervous System” of Embodied AI

The core of Embodied AI lies in endowing robots with the closed-loop capability of “perception-reasoning-action”, which relies on multimodal large models (the “brain”) and motor control algorithms (the “cerebellum model”).
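To make the “perception-reasoning-action” closed loop concrete, here is a minimal sketch in a toy one-dimensional world. It is an illustration under stated assumptions, not how any product named in this article works: the `ToyWorld` environment, the `reason` rule that stands in for the multimodal “brain”, and the `MOTOR_PRIMITIVES` table that stands in for the “cerebellum” are all hypothetical. What makes the loop “closed” is that each action changes the world, so the next perception differs.

```python
from dataclasses import dataclass


@dataclass
class ToyWorld:
    """Hypothetical 1-D world: the robot stands some distance from an object to pick up."""
    distance_m: float
    holding: bool = False

    def sense(self) -> dict:
        # Perception: return a (here, noise-free) observation of the world state.
        return {"distance_m": self.distance_m, "holding": self.holding}

    def apply(self, command: str) -> None:
        # The environment changes in response to the action, which closes the loop.
        if command == "walk_forward":
            self.distance_m = max(0.0, self.distance_m - 0.5)
        elif command == "close_gripper" and self.distance_m <= 0.5:
            self.holding = True


def reason(obs: dict) -> str:
    """Stand-in for the 'brain': choose a high-level task from the observation."""
    if obs["holding"]:
        return "done"
    if obs["distance_m"] <= 0.5:
        return "grasp"
    return "approach"


# Stand-in for the 'cerebellum': map each high-level task to a motor primitive.
MOTOR_PRIMITIVES = {"approach": "walk_forward", "grasp": "close_gripper", "done": "stand_still"}


def run_episode(world: ToyWorld, max_steps: int = 20) -> list[str]:
    """Run perception -> reasoning -> action cycles until done or out of steps."""
    executed = []
    for _ in range(max_steps):
        observation = world.sense()       # perception
        task = reason(observation)        # reasoning
        command = MOTOR_PRIMITIVES[task]  # action selection
        executed.append(command)
        if task == "done":
            break
        world.apply(command)              # acting changes what is perceived next
    return executed


print(run_episode(ToyWorld(distance_m=1.5)))
# prints ['walk_forward', 'walk_forward', 'close_gripper', 'stand_still']
```

In a real system the `reason` step would be a large multimodal model and the motor step a learned controller. The loop structure, however, stays the same.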

As the “intellectual core” of humanoid robots, the “brain” undertakes complex functions such as environmental perception, task understanding, high-level decision-making, and emotional interaction. Integrating high-performance computing platforms with cutting-edge algorithms, it processes data streams from various sensors—visual, auditory, tactile, etc.—to achieve precise cognition of the surrounding world.

In recent years, the introduction of multimodal large models has significantly enhanced the intelligence level of humanoid robots:

•Perceptual Ability: With large visual perception models, robots can precisely segment complex scenes, recognize objects, and even learn tasks such as navigation, obstacle avoidance, and grasping deformable objects autonomously from limited data.

•Imaginative Ability: Through technologies such as diffusion models and Generative Adversarial Networks (GANs), robots have gained the capacity for artistic creation and realistic simulation. They can not only generate images, music, and text but also predict physical phenomena and environmental interactions.

•Decision-Making Ability: The application of large language models has endowed robots with logical reasoning, language comprehension, and task planning capabilities, significantly improving their adaptability and flexibility in dynamic environments.

•Emotional Ability: Robots can now recognize and respond to human emotions, providing a more humanized interactive experience. For example, companion robots designed for autistic children can conduct personalized conversations and emotional guidance.

In essence, the upgrading of the “brain” has propelled humanoid robots into an intelligent era of “thought and emotion”.

For example, Google’s Gemini multimodal model, combined with RT control algorithms, has achieved generalization across 3D physical structures and motion trajectories, and Figure AI’s Helix model integrates perception, language understanding, learning, control, and collaboration in an end-to-end learning framework. However, current technologies still suffer from a scarcity of training data for the “cerebellum”.

At the hardware level, NVIDIA’s Omniverse platform provides a simulation training environment, while Tesla’s Dojo supercomputer has realized a cloud-based “physical world closed loop”. The integration of these technologies enables robots to rapidly iterate and learn through virtual environments, thus shortening the development cycle for actual deployment.

(2) Cerebellum: The Key to Motion Coordination, Making Robots More Human-Like

If the “brain” is responsible for “thinking,” the “cerebellum” handles “doing.” It serves as the core system translating abstract instructions into concrete actions. Early humanoid robots featured stiff movements and were prone to imbalance, but today, bolstered by reinforcement learning and imitation learning technologies, they execute smooth, stable, and rhythmic motions.

Modern “cerebellums” not only enable general task execution but also facilitate rapid adaptation to new movements across diverse environments—walking, running, jumping, climbing—empowering humanoid robots to tackle challenges with ease in homes, factories, and outdoor settings.
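At the lowest level, turning a target into joint motion is classically a feedback-control problem. Many learned locomotion policies output joint-position targets that a PD (proportional-derivative) controller then tracks. The sketch below shows only that classical layer, not the reinforcement- or imitation-learning part. It assumes a single rigid joint with no gravity, friction, or torque limits, and the gains and inertia are illustrative values, not figures from any robot mentioned here.

```python
def simulate_pd_joint(target_rad, kp=50.0, kd=10.0, inertia=1.0, dt=0.001, duration_s=3.0):
    """Drive one rotary joint from rest at 0 rad toward target_rad with a PD controller.

    Assumptions: rigid link; no gravity, friction, or torque limits;
    semi-implicit Euler integration with time step dt.
    Returns (final_angle, final_velocity, peak_angle).
    """
    angle, velocity, peak = 0.0, 0.0, 0.0
    for _ in range(int(round(duration_s / dt))):
        error = target_rad - angle
        torque = kp * error - kd * velocity  # spring toward the target, damping on speed
        velocity += (torque / inertia) * dt  # update velocity first (semi-implicit Euler)
        angle += velocity * dt
        peak = max(peak, angle)
    return angle, velocity, peak


final, vel, peak = simulate_pd_joint(1.0)
print(f"final={final:.4f} rad, peak={peak:.3f} rad")
```

With these values the damping ratio kd / (2·sqrt(kp·inertia)) is about 0.71, so the joint settles smoothly with a small overshoot of roughly 4%. Raising kd above about 14.1 makes the response overdamped and removes the overshoot at the cost of a slower approach, which is the same smoothness-versus-speed trade-off the text attributes to modern “cerebellums”.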

(3) Body: The “Iron Muscles” of Bionic Systems

A humanoid robot’s body is a high-tech bionic system made up of core components: actuators (motors/joints), sensors (tactile/vision), and energy systems (batteries/endurance). Historically, the global actuator market was dominated by firms such as Germany’s Schaeffler and Japan’s NSK, but the rise of Chinese enterprises is reshaping this landscape. CATL’s battery technology, RoboSense’s lidar, and the cost-effectiveness and technological breakthroughs of domestic actuators have made China a pivotal player in the global supply chain.

“Iron muscles” represent the most tangible component of humanoid robots and the linchpin for precise operations. Early limb structures suffered from insufficient power and limited degrees of freedom, restricting application scenarios. However, advancements in materials science, drive systems, and modular design have endowed next-generation humanoid robots with “hands and feet” that are more flexible, durable, and even surpass human capabilities in certain aspects.

High-precision joint control, enhanced load capacity, and lightweight design empower humanoid robots to perform industrial tasks such as screwing, handling, and assembly, while also excelling in scenarios demanding greater flexibility, including service, healthcare, and education.

For example, Unitree Robotics has attracted numerous academic researchers and developers to its ecosystem by demonstrating hardware stability through “pre-recorded motion” tests. This strategy highlights that hardware manufacturers must validate platform reliability through tangible demonstrations to build confidence among algorithm developers.

Midstream: Development Status of Humanoid Robots in China

China’s humanoid robot industry follows a distinctive “dual-track” model in which innovative enterprises and universities develop in parallel. The steady emergence of innovative companies, together with targeted policy support, has driven the creation of collaborative R&D platforms such as the National-Local Joint Embodied Intelligence Robot Innovation Center and the Shanghai Humanoid Robot Innovation Center. These platforms use strong alliances to accelerate technological innovation and industrialization. For instance, UBTech launched the Walker series in 2018 and released its new-generation industrial humanoid robot Walker S1 in 2024, focusing on breakthroughs in industrial applications. In early 2025, Zhiyuan Robotics rolled its 1,000th general-purpose embodied robot off the mass-production line, further unlocking its application potential in manufacturing, logistics, and other fields.

Downstream: Commercial Applications

From the perspective of downstream applications, the use cases of humanoid robots are expanding from industrial manufacturing to home services.

In industrial scenarios, robots have partially replaced humans in high-risk operations and flexible production tasks (such as factory sorting). For example, Figure AI’s logistics center sorting robots operate 24/7, far exceeding human efficiency.

Home environments require robots to navigate complex and fragile settings. From basic housework (cleaning, cooking) to complex tasks (childcare, dog walking), robots need exceptional generalization capabilities. Most researchers estimate that humanoid robots will need at least 5–10 years to truly enter households.

The booming rental market for Unitree humanoid robots exemplifies a necessary step in their technical validation phase. Through leasing, Unitree has achieved the following:

•Rapid market demand validation and hardware capability endorsement: By leveraging the “performative attributes” of robots in rental scenarios, Unitree demonstrates hardware motion control capabilities (high-precision joint control, dynamic balance algorithms), attracting developers to design industry-specific movements and driving the upgrade from “showcase leasing” to “functional leasing”.

•Lowered user barriers: The rental model avoids the high costs of one-time purchases (official prices range from ¥99,000 to ¥650,000), expanding the user base and paving the way for future monetization of software services (action development kits, scenario customization).

Future Business Models of Humanoid Robots

The business models of humanoid robots will evolve from pure hardware sales or leasing to composite “hardware + service” models. In the B2B market, enterprises may adopt SaaS-like subscriptions known as “Robots as a Service” (RaaS), such as task-based payments (e.g., a per-unit fee for factory welding) or service subscriptions (similar to Office 365). The core logic of this model is to help enterprises cut costs and improve efficiency.
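A factory weighing the two RaaS options faces a simple break-even calculation: a flat subscription pays off once monthly task volume times the per-task fee reaches the subscription price. The sketch below uses hypothetical prices (3,000 currency units per month versus 0.5 per weld) purely for illustration. Neither figure comes from any vendor.

```python
import math


def break_even_volume(subscription_fee: float, fee_per_task: float) -> int:
    """Smallest monthly task count at which the subscription costs no more than pay-per-task."""
    if fee_per_task <= 0:
        raise ValueError("fee_per_task must be positive")
    return math.ceil(subscription_fee / fee_per_task)


def cheaper_plan(tasks_per_month: int, subscription_fee: float, fee_per_task: float) -> str:
    """Compare a flat monthly subscription with pay-per-task pricing for one month."""
    pay_per_task_total = tasks_per_month * fee_per_task
    return "subscription" if subscription_fee <= pay_per_task_total else "pay-per-task"


# Hypothetical prices: 3,000 per month flat vs. 0.5 per weld.
print(break_even_volume(3000, 0.5))   # 6000 welds per month
print(cheaper_plan(4000, 3000, 0.5))  # pay-per-task
print(cheaper_plan(8000, 3000, 0.5))  # subscription
```

Below the break-even volume, pay-per-task keeps costs proportional to use. Above it, the subscription caps spending. This is the cost-reduction logic the paragraph describes.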

In the consumer market, a hybrid of outright hardware purchase (basic version) plus software subscription (advanced features) will become mainstream. For example, users might buy a home robot for $10,000 and unlock cooking, education, and other modules through subscriptions. The potential of robot app stores (similar to Apple’s App Store) is enormous: developers could sell skill packs, and a revenue-sharing model may drive hundreds of millions in transactions.

As a result, competition in the humanoid robot industry may gradually enter an “ecosystem war” phase:

•Tesla migrates its FSD autonomous driving technology to robots, building full-stack capabilities across hardware, AI, and manufacturing.

•NVIDIA provides tool-based ecosystems (chips+simulation platforms).

•Unitree Robotics preempts entry points into service scenarios through standardized hardware platforms and developer-friendly ecosystems.

3. Application Stages and Performance of Humanoid Robots in Educational Scenarios

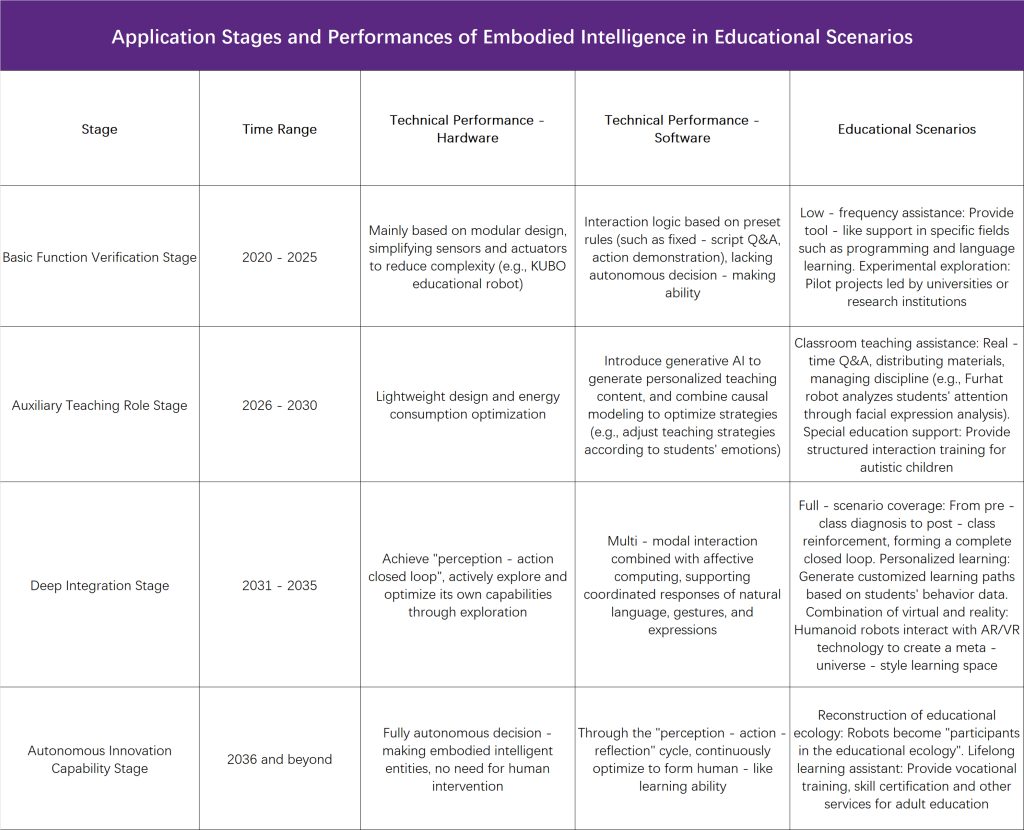

Development Stages of Humanoid Robots in Educational Scenarios

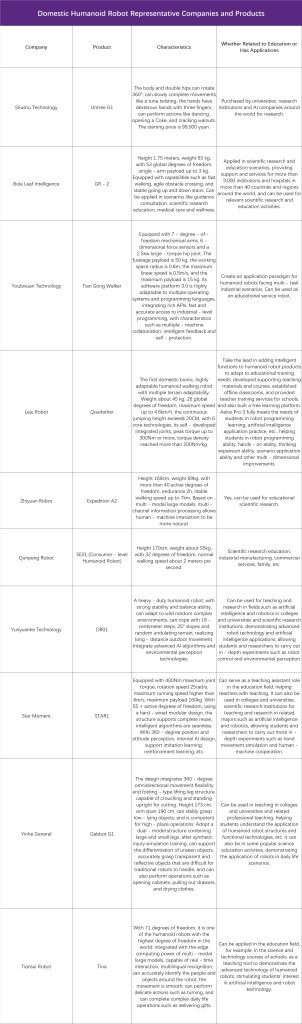

Based on the structural characteristics of humanoid robots, they are expected to first replace humans in special fields, achieve demonstrative applications in industry, and integrate into service industries such as home care and healthcare for large-scale civilian use upon reaching higher maturity.

In healthcare, humanoid robots can assist patients in rehabilitation training by imitating human movements, such as limb function recovery and gait correction. They adjust training programs in real time according to patients’ progress, improving rehabilitation efficiency.

In education and training, the mainstream view holds that current embodied intelligence applications in education remain in the “small-scale scenario validation” stage, suitable for exploration in specific fields (e.g., special education, STEAM practice, vocational education, and university research). However, large-scale popularization awaits hardware cost reduction and algorithmic breakthroughs. Applications in education will undergo a gradual process from “functional validation” to “deep integration”.

Future value depends on the pace of technological breakthroughs and how precisely the technology is adapted to each scenario. If key technologies such as perception-action loops and affective computing mature, embodied intelligence may reshape the education ecosystem. In the short term, it needs to complement traditional intelligent devices, serving as a “tool-based supplement” rather than a disruptor of traditional education models. In the long run, overcoming bottlenecks such as hardware costs and affective computing could make it a key driver of “human-machine collaborative education”.

Development Stages: Technological Maturity and Scenario Requirements

Compared with traditional intelligent devices, Embodied AI responds through synchronized movements, expressions, and speech, so its interaction style is closer to that of a human teacher than that of a tablet or intelligent learning machine. It also adapts better to physical environments and can perform real-world tasks (such as operating physics experiments), compensating for the “virtual-only” limitations of traditional devices. However, current technology still faces challenges: high costs, relatively high failure rates, and limited emotional depth. Robots can simulate emotions, but they cannot replace the empathy of human teachers.

Application of Humanoid Robots in Vocational Education and K12 Education

Over the next 5 years, existing intelligent learning machines and platforms can meet most basic needs at a far lower cost than embodied intelligence. In the long term, as technical costs fall and algorithms advance, embodied intelligence may become a core component of “human-machine collaboration”, shifting from “tool assistance” to “ecosystem reconstruction”. Robots will serve not only as teaching tools but also as educational participants (such as virtual tutors in metaverse classrooms), at which point genuine demand will emerge.

Looking at specific market segments, vocational education and K12 education illustrate how humanoid robots are being applied.

Vocational Education: Skill Practice and Industrial Scenario Adaptation

•Core Needs: Cultivate practical skills matching industrial demands and improve operational accuracy and scenario adaptability.

•Application Characteristics of Humanoid Robots:

•Industrial-level Task Simulation: Conduct skill training in highly realistic simulated industrial scenarios (such as factory assembly lines and equipment maintenance). For example, UBTech’s Walker S1 handles tasks such as material handling, sorting, and quality inspection in Geely factories. Students can program the robot to perform similar operations and learn the logic of collaborative industrial robot operations.

•Complex Environment Training: Combine the multimodal perception capabilities (such as vision and touch) of humanoid robots to simulate dangerous environments (such as nuclear power plant inspection and high-altitude operations), and improve students’ emergency response capabilities in extreme conditions.

•Data-driven Skill Optimization: Use simulation platforms (such as NVIDIA Isaac Sim) to generate industrial scenario data, helping students judge whether robot motion trajectories are reasonable and optimize operating processes.
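One way students might “analyze the rationality of robot motion trajectories” is to compute a few summary metrics from sampled end-effector positions, whether exported from a simulator or logged on hardware. The sketch below assumes a hypothetical sample format of `(t_seconds, x, y, z)` in meters and a hypothetical 0.25 m/s speed limit. It does not use the Isaac Sim API. It reports total path length, peak speed, and a “straightness” ratio that exposes unnecessary detours.

```python
import math


def trajectory_metrics(samples, speed_limit=0.25):
    """Summarize a sampled end-effector trajectory.

    samples: time-ordered list of (t_seconds, x, y, z), positions in meters.
    speed_limit: hypothetical safety threshold in m/s.
    """
    path_length = 0.0
    peak_speed = 0.0
    for (t0, *p0), (t1, *p1) in zip(samples, samples[1:]):
        segment = math.dist(p0, p1)  # straight-line distance between consecutive samples
        path_length += segment
        peak_speed = max(peak_speed, segment / (t1 - t0))
    direct = math.dist(samples[0][1:], samples[-1][1:])
    # 1.0 means the end effector moved in a straight line; lower values indicate detours.
    straightness = direct / path_length if path_length > 0 else 1.0
    return {
        "path_length_m": path_length,
        "peak_speed_m_s": peak_speed,
        "straightness": straightness,
        "within_speed_limit": peak_speed <= speed_limit,
    }


# An L-shaped move: 0.3 m along x, then 0.4 m along y, one second each.
print(trajectory_metrics([(0.0, 0.0, 0.0, 0.0), (1.0, 0.3, 0.0, 0.0), (2.0, 0.3, 0.4, 0.0)]))
```

For the L-shaped example the path is 0.7 m against a 0.5 m direct distance (straightness of about 0.71), and the 0.4 m/s second leg breaks the assumed speed limit. Both are natural starting points for a student to optimize the motion.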

K12 Education: Programming and Interdisciplinary Integration

•Core Needs: Stimulate interest, cultivate logical thinking and innovative abilities, and connect with artificial intelligence basic education policies.

•Application Characteristics of Humanoid Robots:

•Programming and AI Introduction: Control humanoid robots to perform actions (such as dancing and obstacle avoidance) through graphical programming tools. For example, the Zhongqing SA01 robot lets students customize action sequences, turning abstract code into intuitive behaviors.

•Interdisciplinary Project Practice: Design comprehensive tasks in combination with STEM courses, such as using robots to simulate ecosystems (biology), build simple mechanical structures (physics), analyze motion data (mathematics), etc., to achieve interdisciplinary knowledge integration.

•Emotional Interactive Teaching: Equipped with multimodal interaction models (such as voice dialogue and expression recognition), robots can serve as “AI teaching assistants” to improve the learning enthusiasm of young students through personalized feedback.
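Behind a graphical programming tool, the blocks a student drags into place are typically compiled into a flat list of motion commands. The sketch below illustrates that idea with a hypothetical action library and hypothetical per-action durations. It is not the Zhongqing SA01 SDK, whose interface the source does not describe. It also shows a common teaching point: rejecting actions the robot cannot perform before anything runs.

```python
from dataclasses import dataclass

# Hypothetical action library with durations in seconds (not a real robot's API).
ACTION_DURATIONS = {
    "step_left": 0.5,
    "step_right": 0.5,
    "wave_left_arm": 1.0,
    "wave_right_arm": 1.0,
    "bow": 2.0,
}


@dataclass(frozen=True)
class Block:
    """One graphical-programming block: an action and how many times to repeat it."""
    action: str
    repeat: int = 1


def compile_blocks(blocks):
    """Expand blocks into a flat command list, rejecting anything the robot cannot do."""
    commands = []
    for block in blocks:
        if block.action not in ACTION_DURATIONS:
            raise ValueError(f"unknown action: {block.action}")
        if block.repeat < 1:
            raise ValueError(f"repeat must be >= 1, got {block.repeat}")
        commands.extend([block.action] * block.repeat)
    return commands


def total_duration(commands):
    """Estimated run time of a compiled sequence, in seconds."""
    return sum(ACTION_DURATIONS[c] for c in commands)


dance = [Block("step_left"), Block("step_right"), Block("wave_left_arm", repeat=2), Block("bow")]
sequence = compile_blocks(dance)
print(sequence, total_duration(sequence))
```

The “repeat” field is the block-language equivalent of a loop, so students meet iteration and input validation while composing a dance.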

Real-world Cases

UBTech’s self-developed Yanshee robot has been deployed in thousands of schools nationwide. As an “all-rounder” for AI education, it covers core courses such as robotics, machine vision, and intelligent voice, and has become a regular in vocational college skill competitions, injecting new technological momentum into vocational education.

RobotPunk is reshaping educational scenarios with hardcore technology and an innovative ecosystem. Its product matrix—including Aelos, Kuafu, and Luban—covers the entire spectrum from K12 to higher education. The Aelos series has become a “classroom star” for AI enlightenment in primary and secondary schools through graphical programming and multimodal interaction. Kuafu and Luban cater to university research and vocational education, respectively, offering advanced training in intelligent manufacturing, bionic control, and other fields. The Aelos Embodied intelligence platform, jointly developed by RobotPunk and Digua Robot, integrates RDK X5 computing power with the TongVerse virtual simulation system, achieving a “virtual-real integration” breakthrough in teaching. It has been implemented in over 200 institutions, including those in Wuhan. By establishing school-enterprise training bases, incubating competitions, and supporting open platforms (compatible with Python/Lua programming), RobotPunk has built a closed-loop “teach-learn-compete-research” ecosystem. The company plans to cultivate tens of thousands of embodied intelligence professionals within three years, enabling cutting-edge technology to truly empower the future of education.

4. Future Trends

Trend 1: Deep Integration of Embodied Intelligence and Multimodal Large Models Drives Generalization and Personalization in Educational Scenarios

Upgraded Multimodal Interaction

By integrating vision-language-action models, humanoid robots can dynamically adjust teaching strategies by synthesizing multi-dimensional student data such as facial expressions, voice tones, and body language. For example, robots developed by OpenAI and Figure using vision-language models (VLMs) already support natural dialogue and task execution. In education, this will evolve to support homework tutoring, emotional perception, and personalized interaction.

Synergy of Generalization and Specialization

General large models support cross-disciplinary teaching (e.g., integrating knowledge across STEM), while vertical models optimize subject-specific education (e.g., programming, language learning). UBTech’s Walker S1, for instance, has applied swarm intelligence technology in industrial multi-task collaboration, which could migrate to educational group projects.

Trend 2: Simulation Training Platforms Accelerate Intelligent Iteration in Educational Scenarios

Virtual-Real Hybrid Training Environments

High-fidelity simulation platforms (e.g., NVIDIA Isaac Sim) allow robots to simulate teaching tasks in virtual classrooms, accumulating interaction data to optimize algorithms. Xi’an Jiaotong University and YouAIZhihe’s “One Brain, Multiple Forms” embodied model—validated in industrial scenarios—may expand to educational robot skill training.

Low-Cost Trial and Rapid Deployment

Simulations generate massive teaching scenario data (e.g., student questions, classroom emergencies), reducing real-world debugging costs. The State Grid’s “Digital Twin + AR” model, which enhanced equipment maintenance efficiency, could inspire remote teaching assistance for educational robots.

Trend 3: End-to-End Large Models Drive Autonomous Operation and Adaptive Teaching

Full-Process Intelligent Decision-Making

Large models can cover the entire education chain, from perceiving student needs to generating teaching actions. Figure 01, for example, uses end-to-end neural networks for seamless task planning and execution, which could later be applied to dynamically adjusting course difficulty or answering questions in real time.

Long-Term Task Management

Combining reinforcement learning with memory mechanisms, robots can manage long-term teaching goals (e.g., semester plans) and track student progress. Google DeepMind’s RT-series models, demonstrating cross-task generalization in robot control, could further enable personalized learning path design in education.

Trend 4: Systematized Construction of Human-Robot Coexistence and Ethical Norms

Safety Design

Flexible actuators and force-control algorithms (e.g., UBTech Walker S1’s precision assembly technology) allow robots to interact physically without harming students, suitable for childcare companionship.

Privacy and Ethical Frameworks

Educational robots must comply with data privacy regulations (e.g., anonymizing student behavior records) and enhance trust through transparent algorithms. The EU’s AI ethics guidelines, for instance, may set precedents for embedding similar norms in educational robot development.
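A common first step toward “anonymizing student behavior records” is to drop direct identifiers and replace the student ID with a keyed hash (HMAC). The same student then maps to the same pseudonym across sessions, so progress can still be tracked, while anyone without the secret key cannot reverse it. The field names below are hypothetical. Strictly speaking, this is pseudonymization rather than full anonymization, and under the EU GDPR pseudonymized data is still personal data, so it complements rather than replaces a compliance review.

```python
import hashlib
import hmac

# Hypothetical fields that identify a student directly and must never leave the device.
DIRECT_IDENTIFIERS = {"name", "face_image_path"}


def pseudonymize(student_id: str, secret_key: bytes) -> str:
    """Map a student ID to a stable keyed-hash pseudonym (HMAC-SHA256, truncated)."""
    digest = hmac.new(secret_key, student_id.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]


def anonymize_record(record: dict, secret_key: bytes) -> dict:
    """Drop direct identifiers and replace student_id with its pseudonym."""
    cleaned = {
        k: v for k, v in record.items()
        if k not in DIRECT_IDENTIFIERS and k != "student_id"
    }
    cleaned["student_pseudonym"] = pseudonymize(record["student_id"], secret_key)
    return cleaned


record = {"student_id": "S-1024", "name": "Li Hua", "face_image_path": "/frames/0001.png",
          "emotion_label": "confused", "timestamp_s": 12.5}
print(anonymize_record(record, secret_key=b"keep-this-key-out-of-the-dataset"))
```

Using HMAC rather than a plain hash matters because student IDs come from a small, guessable space. Without a secret key, an attacker could simply hash every possible ID and match the results.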

Trend 5: Transformation from Auxiliary Tools to Educational Ecosystem Reconstruction

Expanded Roles

Robots will evolve from single-function teaching aids (e.g., homework grading, knowledge explanation) to “collaborative partners” involved in curriculum design and interdisciplinary projects. India’s AI teacher Iris, currently handling basic instruction, could integrate embodied intelligence to guide experiments.

Home-School Scenario Linkage

Robots providing 24/7 online services can bridge the temporal and spatial gaps of traditional education. The robot technology of Virtual Dongdian (虚拟动点), already applied in home services, may extend to after-school tutoring and family education support.

Trend 6: New Roles Drive Industry Shifts, Cultivating a New Generation of Robot Education Algorithm Developers

Coupling of Morphological Design and Algorithm Development

Companies like Unitree validate platform stability through “pre-recorded motions”, while Figure AI defines hardware requirements through its end-to-end Helix model. The two approaches reflect a strategic choice between hardware standardization and algorithm-driven customization.

Technological Maturity and Strategic Trade-offs

Embodied intelligence remains in an exploratory stage worldwide, with no significant generational gaps between leading players. Even Tesla’s Optimus does not outperform Chinese counterparts such as Zhiyuan in cross-scenario generalization or interaction in unstructured environments. Unitree’s focus on motion control reflects a strategic balance between technical feasibility and commercial value: engineering breakthroughs in high-precision motion control allow faster scenario deployment within today’s AI capabilities, while calling on third-party developers to contribute more R&D on top of its platform.

Value Proposition of Standardized Robots

A standardized robot, supplied with an OS, drivers, and applications by embodied intelligence developers, could execute a virtually unlimited range of tasks: security patrols, home care, industrial production, space exploration, and end-to-end education. These developers, whether newcomers or education companies in transition, will need hybrid technical and educational capabilities:

Technical Side: Robot secondary development (e.g., programming motion trajectories, sensor data collection), AI model training (e.g., emotion recognition optimization)

Educational Side: Pedagogical expertise (e.g., vocational skill mapping, K12 curriculum standards) and scenario-specific interaction design

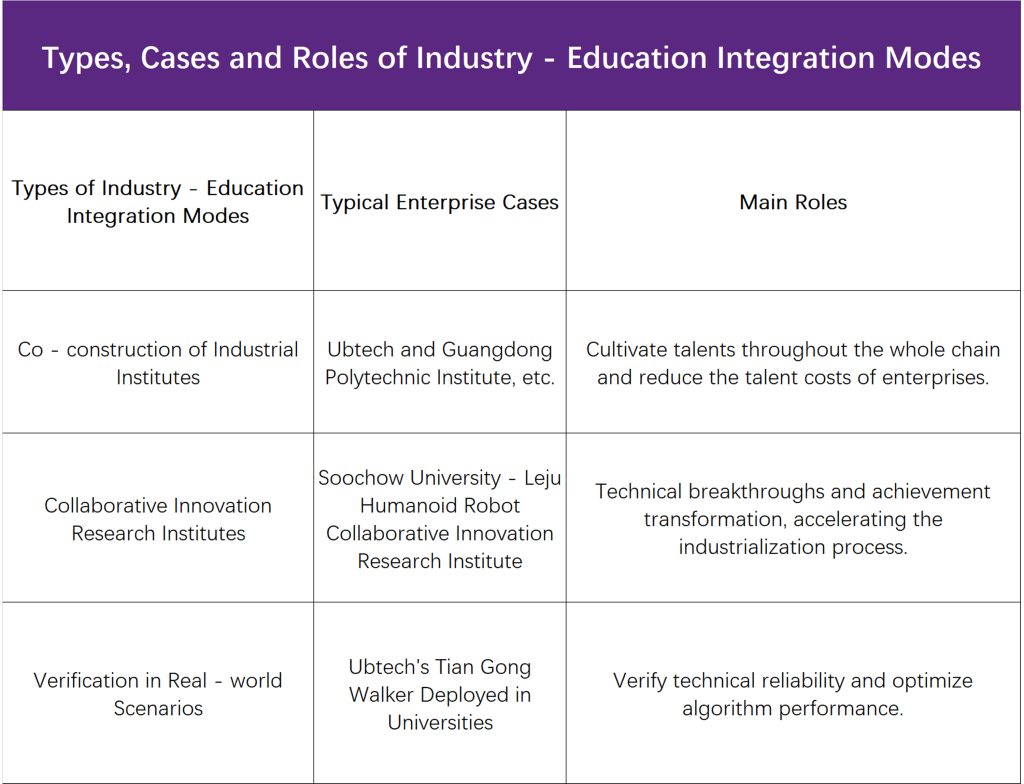

Trend 7: Integration of Industry-Education and University-Industry-Research Collaboration

Technology Transfer and Scenario Validation

To move from labs into education, humanoid robots need validation in real teaching settings. UBTech’s collaborations with universities such as Wuhan University and Jianghan University have deployed Skyrizon robots for high-precision research and cultural exchange. At Jianghan University, a Skyrizon lite recently performed a human-robot dance that exemplifies the integration of technology and the humanities, while another university has applied the robot to autonomous navigation and plans to extend its use to object grasping. Such partnerships accelerate the adaptation of technology to education and give institutions cutting-edge platforms.

Talent Cultivation and Ecosystem Building

The humanoid robot industry faces a severe talent shortage. By 2025, the gap for high-end CNC and robot professionals is projected to reach 4.5 million. To address this, universities and enterprises are co-establishing industrial colleges. UBTech, for example, has founded 5 intelligent robot industrial colleges with institutions like Guangdong University of Technology, focusing on full-chain capabilities from design to application. Through lab-training-employment ecosystems, they develop scenario-based courses in smart manufacturing and healthcare, enabling students to master frontier tech for quality employment.

Open-Source Collaboration and Technical Breakthroughs

Open-source communities and collaborative research institutes drive technical leaps. The Soochow-RobotPunk Humanoid Robot Collaborative Innovation Institute focuses on research directions including basic component R&D, control algorithms, mechanical innovation, application research, and behavioral skill digitization. A Huazhong University of Science and Technology team, collaborating with Wuhan Granor, developed the Dazhuang power station inspection robot in 3–4 months, one month ahead of schedule. This shows how university-industry collaboration shortens R&D cycles and provides core technology for education, healthcare, and services.

These trends indicate that humanoid robots will evolve from educational tools to intelligent ecosystem architects, with their proliferation dependent on parallel advancements in AI, hardware, and ethics. Education may emerge as the first civilian sector to achieve large-scale human-robot collaboration.

Trend 8: Evolution of Data Labeling Technology and Its Educational Applications

Data labeling technology is evolving from traditional manual annotation to intelligent automation, and from single-modal to multi-modal intelligent labeling, providing critical data support for humanoid robot applications in educational scenarios.

Intelligent Upgrading of Labeling Technology

•Traditional data labeling relied heavily on manual labor, suffering from low efficiency and high costs. With the development of AI, intelligent labeling has become mainstream, significantly reducing annotation expenses. For example:

•NVIDIA’s active learning framework AL-Fusion automatically selects the most informative samples, cutting labeling costs by 60%.

•Meta’s self-supervised labeling technologies (Segment Anything + SAM-E) enable click-to-label functionality, supporting continuous frame annotation in videos.

•These technologies are particularly vital in education, where complex classroom environments require massive labeled data for robots to understand teaching contexts. In the training ground of Shanghai Zhiyuan Innovation Technology Co., Ltd., hundreds of robots repeat hundreds of training sessions daily. The real-world interaction data accumulated through physical engagement requires efficient labeling to become the key dataset for embodied intelligence large model evolution.
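The core idea of active learning, selecting the samples whose labels would teach the model the most, can be shown with entropy-based uncertainty sampling, a standard textbook strategy. This is not the AL-Fusion framework mentioned above, whose interface is not described here. The sketch assumes the current model outputs a class-probability list for each unlabeled classroom frame. The frame IDs and probabilities are made up.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of one predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_labeling(predictions, budget):
    """Return the IDs of the `budget` samples the model is least certain about.

    predictions: dict mapping sample ID -> list of class probabilities from the current model.
    """
    ranked = sorted(predictions, key=lambda sid: entropy(predictions[sid]), reverse=True)
    return ranked[:budget]


# Hypothetical model outputs for unlabeled classroom frames (3 engagement classes).
predictions = {
    "frame_001": [0.98, 0.01, 0.01],  # confident: labeling adds little
    "frame_002": [0.40, 0.35, 0.25],
    "frame_003": [0.60, 0.30, 0.10],
    "frame_004": [0.34, 0.33, 0.33],  # near-uniform: most informative
}
print(select_for_labeling(predictions, budget=2))  # ['frame_004', 'frame_002']
```

Annotators then label only the selected frames, the model is retrained, and the cycle repeats. Sending just the most ambiguous frames to humans is how active learning reduces labeling cost.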

Multi-Modal Labeling Requirements in Educational Scenarios

Humanoid robot applications in education demand fused annotation of data from multiple modalities, and the analysis of teacher-student interaction in classrooms has become a research hotspot. Educational scenarios require labeling diverse and complex data types, including:

•Visual Data: Student facial expressions, body language, object positions in classroom environments.

•Audio Data: Student questions, teacher instructions, classroom conversations.

•Motion Data: Teacher demonstration gestures, robot operation trajectories.

•Environmental Data: Classroom layouts, equipment positions, lighting conditions.

Such data requires precise annotation to support robots’ multi-modal interaction capabilities.
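One way to organize such multi-modal labels is a time-aligned annotation record per classroom clip, where each label carries its modality and a start/end time. The robot can then ask what was happening across all modalities at a given moment. The schema, modality names, and labels below are hypothetical and are not taken from any specific annotation tool.

```python
from dataclasses import dataclass, field

# The four modality groups listed above.
MODALITIES = {"visual", "audio", "motion", "environment"}


@dataclass
class Annotation:
    """One time-stamped label in one modality (times in seconds from clip start)."""
    modality: str
    start_s: float
    end_s: float
    label: str

    def __post_init__(self):
        if self.modality not in MODALITIES:
            raise ValueError(f"unknown modality: {self.modality}")
        if self.end_s < self.start_s:
            raise ValueError("end_s must not precede start_s")


@dataclass
class ClassroomClip:
    """All annotations for one recorded classroom clip."""
    clip_id: str
    duration_s: float
    annotations: list[Annotation] = field(default_factory=list)

    def add(self, ann: Annotation) -> None:
        if ann.end_s > self.duration_s:
            raise ValueError("annotation extends past the end of the clip")
        self.annotations.append(ann)

    def labels_at(self, t: float) -> list[tuple[str, str]]:
        """All (modality, label) pairs active at time t, sorted for stable output."""
        return sorted((a.modality, a.label) for a in self.annotations if a.start_s <= t <= a.end_s)


clip = ClassroomClip("lesson_01", duration_s=60.0)
clip.add(Annotation("visual", 10.0, 14.0, "student_raises_hand"))
clip.add(Annotation("audio", 12.0, 18.0, "student_question"))
clip.add(Annotation("motion", 20.0, 23.0, "teacher_points_to_board"))
clip.add(Annotation("environment", 0.0, 60.0, "lights_dimmed"))
print(clip.labels_at(13.0))
```

Querying t = 13 s returns the raised hand, the spoken question, and the lighting condition together. That cross-modal view is what fused annotation is meant to give a classroom robot.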

5. Conclusion

Despite continuous technological breakthroughs, the humanoid robot industry still faces a critical challenge—ambiguous application scenarios, which severely hinder commercialization. This has led to conservative investment across the industry chain, creating a vicious cycle where technological R&D struggles to align with practical implementation.

Although capabilities such as bipedal locomotion and environmental perception continue to advance, humanoid robots have yet to find a truly “irreplaceable” value proposition. In industrial sectors, traditional robots dominate with higher precision and lower costs, leaving humanoids struggling to compete on efficiency. In service scenarios such as elderly care and education, the needs exist, but core elements such as safety standards and emotional interaction remain immature. The consumer market, meanwhile, is caught in an awkward mismatch between functionality and price: steep costs clash with basic features such as cleaning or companionship.

The greatest misconception today is expecting humanoid robots to be “all-purpose out of the box”. Just as you wouldn’t expect a freshly installed computer to run Black Myth: Wukong immediately, the real breakthrough for humanoids lies in hardware standardization + algorithmic ecosystem building. The industry’s next leap depends on this formula, and the world is watching with anticipation.

Active Community Global, Inc (ACG EVENTS Global) is a leader in conference planning and production. We produce world-class events such as summits, technical forums, award ceremonies, and company visits, focusing on the areas most relevant to the industry sectors we serve. We are dedicated to delivering high-quality, informative, value-added strategic business conferences where audience members, speakers, and sponsors can transform their business, develop key industry contacts, and walk away with new resources.