Solving the data and generalization problems puts general-purpose humanoid robot R&D into high gear

Electronic Enthusiast Network reports: In the field of embodied intelligence, humanoid robots are arguably the most anticipated product category, enjoying high market visibility and great development potential. Statistics from the New Strategy Humanoid Robot Industry Research Institute show that as of April 2025, more than 300 companies worldwide were building humanoid robot bodies, about half of them Chinese.

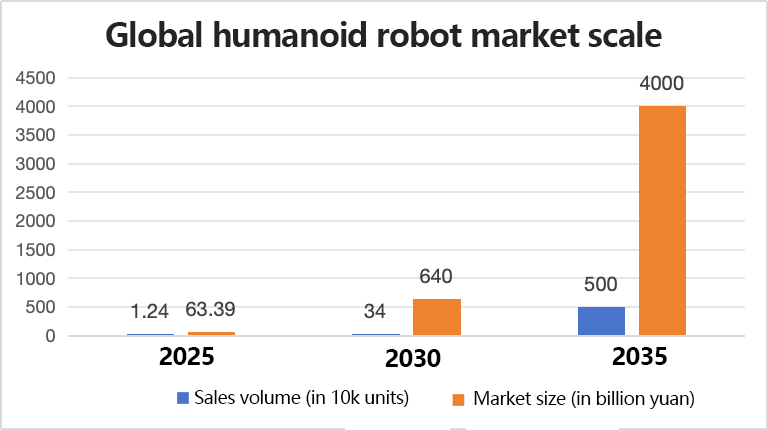

As more and more companies around the world enter the humanoid robot market, expectations for it keep rising. According to the “2025 Humanoid Robot Industry Development Blue Book” recently released by GGII, global humanoid robot sales are expected to reach 12,400 units in 2025, a market worth 6.339 billion yuan. Sales are projected to approach 340,000 units in 2030, with the market exceeding 64 billion yuan, and to exceed 5 million units by 2035, with the market exceeding 400 billion yuan.

Data source: “2025 Humanoid Robot Industry Development Blue Book”, illustrated by Electronic Enthusiast Network
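The growth these forecasts imply can be checked with simple compound-growth arithmetic. The sketch below takes the headline figures above as point values (the report's "approach" and "exceed" make them approximate) and computes the compound annual growth rate (CAGR) and the implied average price per robot; the variable names are ours, not GGII's.

```python
# Back-of-envelope check of the GGII forecast figures quoted above.
# "Approach"/"exceed" are treated as exact values, so results are approximate.

def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end / start) ** (1 / years) - 1

units = {2025: 12_400, 2030: 340_000, 2035: 5_000_000}
market_yuan = {2025: 6.339e9, 2030: 64e9, 2035: 400e9}

for a, b in [(2025, 2030), (2030, 2035)]:
    print(f"{a}-{b}: units CAGR {cagr(units[a], units[b], b - a):.1%}, "
          f"market CAGR {cagr(market_yuan[a], market_yuan[b], b - a):.1%}")

# Implied average selling price per robot = market size / units sold
for year in units:
    print(f"{year}: ~{market_yuan[year] / units[year]:,.0f} yuan per unit")
```

On these numbers, unit sales would grow by roughly 94% a year from 2025 to 2030 while revenue grows by roughly 59% a year, which means the implied average price falls from about 511,000 yuan per robot in 2025 to about 188,000 yuan in 2030 and about 80,000 yuan in 2035.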

However, developers must still overcome many challenges before this market potential can be realized. The difficulties are greatest at the level of data and model capabilities, where humanoid robot design runs into technical bottlenecks on several fronts. These include the accuracy of low-level perception and decision-making, and also how efficiently complex physical systems and intelligent algorithms work together. General-purpose humanoid robots face an even harder requirement: building, from limited data, general models whose capabilities approach or even surpass those of humans. Multi-task coupling, physical constraints, and scene generalization are especially prominent challenges.

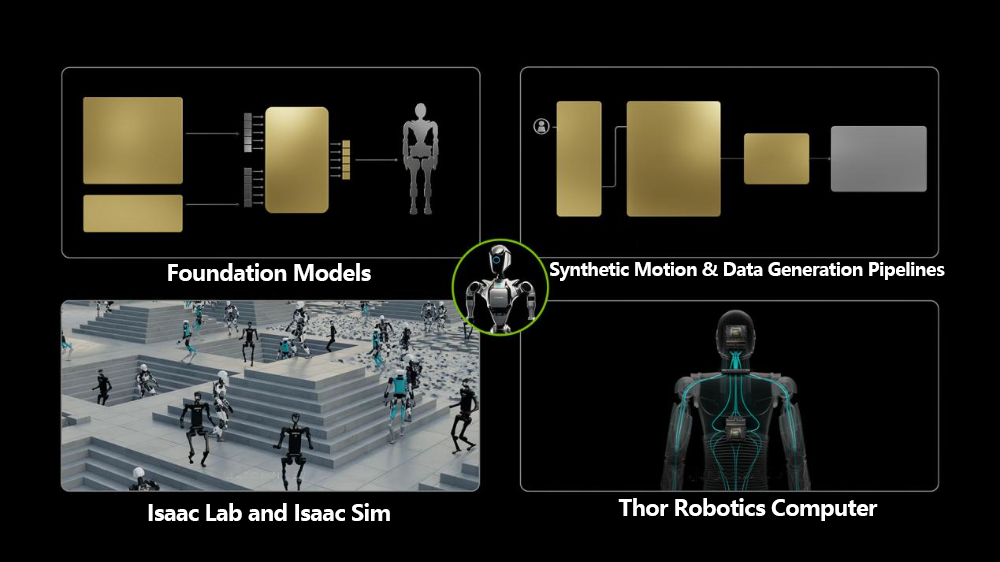

Among the efforts to address these challenges, NVIDIA's open-source humanoid robot foundation model, Isaac GR00T N1, offers an efficient solution. Its innovations in dual-system architecture, synthetic data generation, simulation training, and other areas have significantly lowered the barrier to developing general-purpose humanoid robots, marking a new stage of development for the field.

The dual constraints of data diversity and scenario generalization

The humanoid robot industry has a widely recognized rule of thumb: “Whoever has the data rules the world.” The intelligence of humanoid robots relies on large AI models, and the capabilities of those models depend heavily on the scale and quality of their training data. In this sense, data is the “soul” of a humanoid robot, yet the industry currently suffers from a shortage of it.

First, data collection scenarios are complex. General-purpose humanoid robots need to adapt to home, industrial, outdoor, and other settings. Lighting, terrain, and object layout vary widely between these settings, so collecting data across all of them takes considerable time and resources. At present, most robot data come only from simple actions (such as walking and grasping) in laboratory environments. Real-time interaction data from complex, dynamic settings is still lacking; examples include sorting materials in a factory and caring for the elderly at home.

Second, the barrier to multimodal data collection is high. Humanoid robots perceive their environment by fusing vision, hearing, touch, and other modalities. This requires multiple sensors to operate in sync while keeping their data temporally consistent, which is technically very demanding. Building the robot is only part of the task: data quality at the collection stage also depends on a capable multimodal perception system. Medical scenarios additionally require flexible sensors, which further limits how fast the data pool can grow.

Third, data annotation is both difficult and labor-intensive. Annotating humanoid robot data requires knowledge of kinematics, dynamics, and the application domain. For example, annotating walking postures requires understanding joint angles and movement trajectories, so annotators need specialized training. Moreover, the data volume is huge and the correlations are complex. Actions, postures, and their interactions with the environment must all be annotated, causing the annotation workload to grow exponentially.

Finally, data silos and the lack of standards are serious problems. Companies treat data as a core competitive asset and worry that sharing it will leak their technology, so data stays locked in “data silos”. Meanwhile, different institutions use collection devices, methods, and formats that follow no unified standard. Even where open sharing is encouraged, integrating the data therefore remains difficult.
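The second challenge above is keeping multimodal streams temporally consistent. One common software step is to pair samples from sensors running at different rates by timestamp. The sketch below assumes every sensor already stamps its samples against a shared clock (the hardware synchronization that makes this true is not shown). The function names, the 5 ms tolerance, and the example rates are hypothetical and are not part of any particular robot SDK.

```python
from bisect import bisect_left
from typing import List, Optional, Sequence, Tuple

def nearest_index(timestamps: Sequence[float], t: float) -> Optional[int]:
    """Index of the sample closest to time t in a sorted timestamp list."""
    if not timestamps:
        return None
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    # t lies between samples i-1 and i: pick the closer neighbour
    return i if timestamps[i] - t < t - timestamps[i - 1] else i - 1

def align_streams(reference: Sequence[float], other: Sequence[float],
                  tolerance: float) -> List[Tuple[int, int]]:
    """Pair each reference sample with the nearest sample of another stream.

    Pairs further apart than `tolerance` seconds are dropped, so the
    dataset never mixes readings taken at noticeably different moments."""
    pairs = []
    for ri, t in enumerate(reference):
        oi = nearest_index(other, t)
        if oi is not None and abs(other[oi] - t) <= tolerance:
            pairs.append((ri, oi))
    return pairs

# Hypothetical example: a 30 Hz camera and a 100 Hz tactile sensor on a shared
# clock, where the tactile stream drops out between 0.05 s and 0.15 s.
camera = [k / 30 for k in range(6)]
tactile = [k / 100 for k in range(20) if not 5 <= k < 15]
print(align_streams(camera, tactile, tolerance=0.005))
```

Dropping unmatched frames instead of interpolating is a deliberate conservative choice here. A training pipeline can tolerate fewer samples better than silently misaligned ones.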

Data scarcity directly limits how far large AI models can improve. As noted above, general-purpose humanoid robots must cover complex settings such as homes, offices, and the outdoors. Small datasets cannot capture the diversity of these settings, so the model fails to learn enough patterns and performs poorly in new scenarios. To ensure safety, developers then have to add many physical constraints to the robot and hard-code rules into the control system. These constraints in turn limit the robot's ability to generalize across scenes.

Even more serious than the lack of data is the problem of data quality. Incorrect labels, missing values, or biases can cause a model to learn the wrong patterns, which then leads the robot to make wrong decisions. In elderly care, for example, mislabeled data used to train object recognition could cause the robot to fetch the wrong medicine, with serious consequences.

To break this data deadlock, the robotics industry has turned to motion capture. High-precision sensors record human joint trajectories and muscle force patterns, and these human movements are then converted into robot control commands. The approach tries to bridge human motor experience and robot control, but it has limitations. High-precision motion capture systems require many devices deployed at dedicated sites, which is costly. Vision-based motion capture is vulnerable to lighting changes and occlusion, and inertial motion capture can drift under electromagnetic interference. If the underlying AI model is not capable enough, errors in the demonstrated motions carry over into the robot's behavior and limit its use in complex environments.
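Converting captured human motion into robot commands (retargeting) starts from geometry like the following. This minimal 2D sketch assumes keypoints have already been estimated from motion-capture data. The function names and the 0 to 140 degree knee limits are hypothetical. Practical retargeting works in 3D, handles many joints at once, and uses each robot's own joint conventions. The final clamp is one point where a robot's physical constraints override the human demonstration.

```python
import math
from typing import Tuple

Point = Tuple[float, float]

def joint_angle(a: Point, b: Point, c: Point) -> float:
    """Interior angle at b (degrees) between segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    cross = v1[0] * v2[1] - v1[1] * v2[0]
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    # atan2(|cross|, dot) stays accurate near 0 and 180 degrees, unlike acos
    return math.degrees(math.atan2(abs(cross), dot))

def knee_command(hip: Point, knee: Point, ankle: Point,
                 limits: Tuple[float, float] = (0.0, 140.0)) -> float:
    """Map a human knee pose to a hypothetical robot knee flexion command.

    Flexion is 0 for a straight leg; the result is clamped to the robot's
    joint limits (the defaults here are made up for illustration)."""
    flexion = 180.0 - joint_angle(hip, knee, ankle)
    lo, hi = limits
    return min(max(flexion, lo), hi)

# Knee bent at a right angle -> about 90 degrees of flexion
print(knee_command(hip=(0.0, 1.0), knee=(0.0, 0.0), ankle=(1.0, 0.0)))
```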

The GR00T N1 brings general skills and reasoning to humanoid robots

At GTC 2025, NVIDIA launched a series of new technologies to advance humanoid robot development. They include NVIDIA Isaac GR00T N1, which NVIDIA describes as the world's first open-source, fully customizable foundation model for humanoid robots. NVIDIA founder and CEO Jensen Huang said, “With NVIDIA Isaac GR00T N1 and the new data generation and robot learning framework, global robot developers will embark on a brand new chapter in the AI era.”

GR00T N1 is the first fully customizable model for general humanoid robot reasoning and skills on the NVIDIA Isaac GR00T platform, and it has two notable advantages. First, it is trained on a large humanoid dataset. Second, it adopts an innovative dual-system architecture. Together, these help address the challenges currently facing general-purpose humanoid robot development and give such robots solid baseline performance.

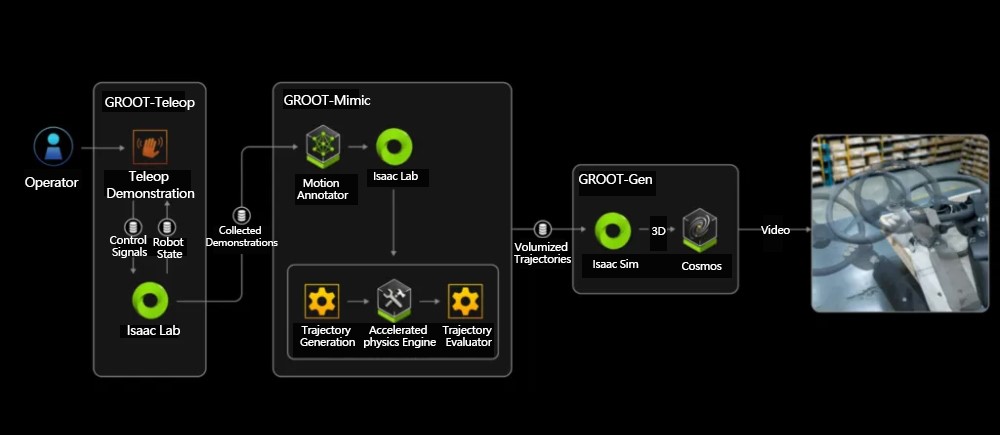

GR00T N1's training data has three sources: real captured data, synthetic data generated with the NVIDIA Isaac GR00T Blueprint, and Internet-scale video data. The Isaac GR00T Blueprint for synthetic motion generation is a reference workflow built on NVIDIA Omniverse and NVIDIA Cosmos. Starting from a small number of human demonstrations, it can create large numbers of synthetic motion trajectories for robot manipulation. A few simple figures show the scale. The GR00T Blueprint can generate 780,000 synthetic trajectories in 11 hours, equivalent to 6,500 hours, or nine months of nonstop human demonstration. The data it generates can also be combined with real-world data, further improving data quality and scale.

GR00T Blueprint workflow, image source: NVIDIA
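The Blueprint figures quoted above are internally consistent, as a few lines of arithmetic show. The sketch uses only the three numbers from the text. The 30.44-day average month is our assumption for converting hours into months.

```python
# Sanity-check the GR00T Blueprint figures quoted above.
TRAJECTORIES = 780_000     # synthetic trajectories generated
GENERATION_HOURS = 11      # wall-clock time to generate them
HUMAN_EQUIV_HOURS = 6_500  # equivalent human demonstration time

speedup = HUMAN_EQUIV_HOURS / GENERATION_HOURS          # wall-clock speedup over humans
traj_per_hour = TRAJECTORIES / GENERATION_HOURS          # generation throughput
secs_per_demo = HUMAN_EQUIV_HOURS * 3600 / TRAJECTORIES  # implied human time per trajectory
months_nonstop = HUMAN_EQUIV_HOURS / 24 / 30.44          # round-the-clock months (avg month)

print(f"speedup ~{speedup:.0f}x, ~{traj_per_hour:,.0f} trajectories/hour")
print(f"~{secs_per_demo:.0f} s of human demonstration per trajectory")
print(f"~{months_nonstop:.1f} months of nonstop demonstration")
```

Each trajectory corresponds to about 30 seconds of human demonstration, and generation runs roughly 590 times faster than a human operator. The 6,500 hours also work out to just under nine months of round-the-clock work, matching the "nine months" figure.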

How can developers obtain training data like this? NVIDIA has addressed this concern as well. At GTC 2025, the company released a large open-source dataset to help build the next generation of physical AI. The initial release contains 15 TB of data, more than 320,000 robot training trajectories, and up to 1,000 Universal Scene Description (OpenUSD) assets, including the SimReady asset collection. Developers can download it from Hugging Face. The published GR00T N1 dataset is part of this larger open-source physical AI dataset. The data can be used for pre-training and also for post-training to fine-tune AI models.

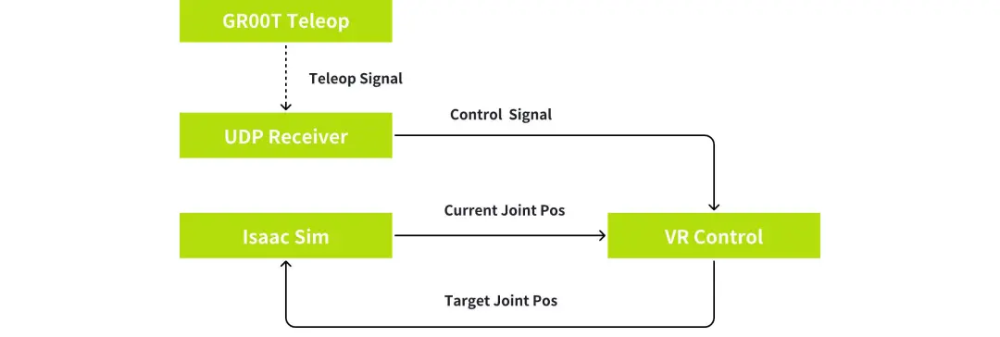

The dataset and data synthesis methods behind GR00T N1 have grown beyond a single solution into a data ecosystem that serves both autonomous robots and autonomous driving. Humanoid robot companies, autonomous driving solution providers, and leading research institutions are actively using the data. These organizations are also building new data and methods on top of it.

For example, Zhiyuan Robot (AgiBot) has used GR00T-Teleop to create a more efficient and user-friendly simulated teleoperation method. GR00T-Teleop is one of the core modules of NVIDIA Project GR00T and supports teleoperation with Apple Vision Pro. Users wearing a VR headset can therefore use handheld controllers to control the arm, waist, and chassis movements of the AgiBot G1 robot in Isaac Sim in real time. GR00T-Mimic then expands this small set of demonstrations into massive amounts of data, scaling up data collection exponentially. This method and others like it address the motion capture pain points described above. With only a few human demonstrations, they can build a “gold standard” for large numbers of humanoid robot movements.

Genie Sim's simulated teleoperation architecture, based on GR00T-Teleop. Image source: NVIDIA

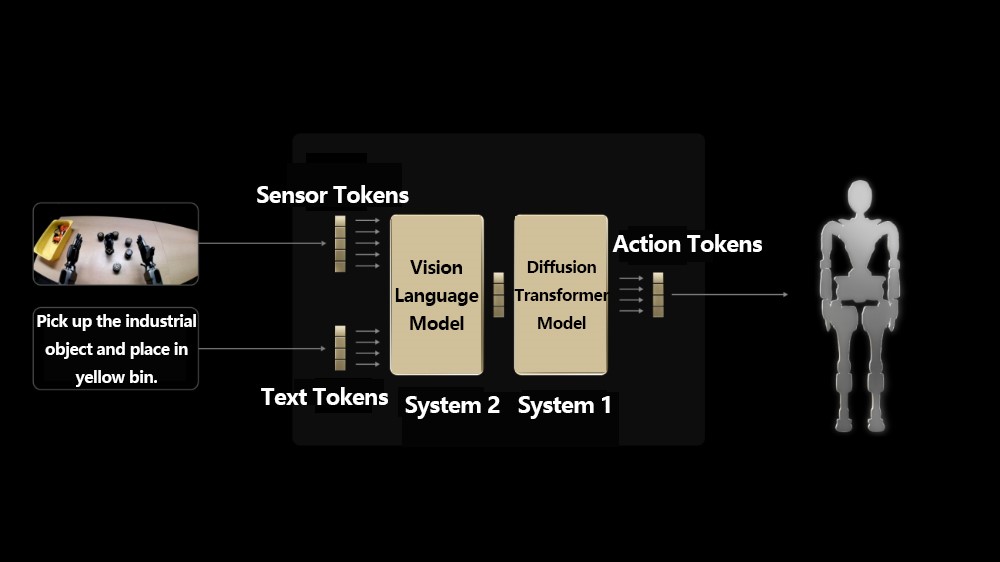
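The GR00T-Mimic step, which turns a handful of teleoperated demonstrations into many trajectories, can be illustrated with an object-centric transform in the spirit of MimicGen-style data generation. This is a toy 2D sketch and is not GR00T-Mimic's API. All function names are hypothetical, and the ±0.3 m and full-rotation randomization ranges are arbitrary. The idea is to express the demonstrated path relative to the object, then replay it against new, randomized object poses. A real pipeline would also split demos into subtasks, use full 6-DoF poses, and discard variants that fail when replayed in simulation.

```python
import math
import random
from typing import List, Tuple

Pose2D = Tuple[float, float, float]  # object pose: (x, y, heading in radians)
Point = Tuple[float, float]          # end-effector position in the world frame

def to_local(pose: Pose2D, p: Point) -> Point:
    """Express world point p in the object frame given by `pose`."""
    x, y, th = pose
    dx, dy = p[0] - x, p[1] - y
    c, s = math.cos(th), math.sin(th)
    return (c * dx + s * dy, -s * dx + c * dy)

def to_world(pose: Pose2D, p: Point) -> Point:
    """Map point p from the object frame given by `pose` back to the world frame."""
    x, y, th = pose
    c, s = math.cos(th), math.sin(th)
    return (x + c * p[0] - s * p[1], y + s * p[0] + c * p[1])

def retarget(demo: List[Point], src_obj: Pose2D, new_obj: Pose2D) -> List[Point]:
    """Replay a demonstrated path so it keeps the same pose relative to a moved object."""
    return [to_world(new_obj, to_local(src_obj, p)) for p in demo]

def generate_variants(demo: List[Point], src_obj: Pose2D, n: int,
                      rng: random.Random) -> List[List[Point]]:
    """Create n synthetic trajectories by randomizing the object's pose."""
    variants = []
    for _ in range(n):
        new_obj = (rng.uniform(-0.3, 0.3), rng.uniform(-0.3, 0.3),
                   rng.uniform(-math.pi, math.pi))
        variants.append(retarget(demo, src_obj, new_obj))
    return variants

# One human demo: approach and reach an object sitting at (1.0, 0.0) with heading 0
demo = [(0.5, -0.5), (0.9, -0.1), (1.0, 0.0)]
synthetic = generate_variants(demo, (1.0, 0.0, 0.0), n=1000, rng=random.Random(42))
print(len(synthetic), "synthetic trajectories from 1 demonstration")
```

Because each variant is a rigid transform of the original, the shape of the motion is preserved exactly. Only its placement relative to the world changes.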

Beyond this extensive data support, GR00T N1 adopts a dual-system architecture that mimics how the human brain thinks. As shown in the figure below, System 2 is a vision-language model based on NVIDIA Eagle and SmolLM-1.7B. It is a slow, deliberate reasoning system that interprets the environment through vision and language instructions. This lets the robot reason about its surroundings and instructions and plan the right actions. System 1 is a diffusion Transformer, a fast action model that turns the plans formulated by System 2 into precise, continuous robot movements. In a warehouse, for example, System 2 can plan a navigation path and the steps of a multi-step sorting task, while System 1 executes each step as smooth motion.

The dual-system architecture of the GR00T N1 model, image source: NVIDIA
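The division of labor between the two systems can be sketched as a dual-rate control loop. In this loop a slow planner updates the goal occasionally, and a fast controller produces an action on every tick. This is a minimal sketch and not GR00T N1's code. Both models are replaced by trivial stand-ins: the real System 1 is a diffusion Transformer, not a proportional step. The class names are hypothetical, and the 10 Hz and 120 Hz rates are assumed for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

@dataclass
class Plan:
    target: float  # toy 1-D goal (e.g. a gripper position) chosen by "System 2"

class SlowPlanner:
    """Stand-in for System 2 (reasoning): re-plans at a low rate."""
    def __init__(self) -> None:
        self.calls = 0

    def plan(self, observation: float, instruction: str) -> Plan:
        self.calls += 1
        # A real VLM would reason over images and text; here a keyword picks the goal.
        return Plan(target=1.0 if "pick" in instruction else 0.0)

class FastActor:
    """Stand-in for System 1 (action): emits a small, smooth step every control tick."""
    def __init__(self, gain: float = 0.1) -> None:
        self.calls = 0
        self.gain = gain

    def act(self, state: float, plan: Plan) -> float:
        self.calls += 1
        return state + self.gain * (plan.target - state)  # move a fraction toward the goal

def run(seconds: float, slow_hz: int = 10, fast_hz: int = 120) -> Tuple[float, int, int]:
    """Drive both systems from one clock; return final state and call counts."""
    planner, actor = SlowPlanner(), FastActor()
    ticks_per_plan = fast_hz // slow_hz
    state = 0.0
    plan: Optional[Plan] = None
    for tick in range(int(seconds * fast_hz)):
        if tick % ticks_per_plan == 0:  # System 2 runs every Nth tick
            plan = planner.plan(state, "pick up the box")
        assert plan is not None         # always set on the first tick
        state = actor.act(state, plan)  # System 1 runs every tick
    return state, planner.calls, actor.calls

print(run(1.0))
```

The point of the split is that the expensive reasoning model does not need to keep up with the control rate. The action model keeps motion smooth between plan updates.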

As a result, GR00T N1 means developers of general-purpose humanoid robots are not held back by data scale or scene generalization from the outset. It can readily adapt to a variety of general tasks, such as grasping and moving objects with one or both hands, or passing items from one arm to the other. It can also handle multi-step tasks that require long context and a combination of general skills. Material handling and inspection is a typical example.

For specific scenarios, developers can post-train GR00T N1 on real or synthetic data to further improve how well humanoid robots adapt to the scene. For complex tasks, developers can also use Newton, an open-source physics engine, to help robots perform those tasks more precisely. Newton is built on the NVIDIA Warp framework, optimized for robot learning, and compatible with simulation frameworks such as Google DeepMind's MuJoCo and NVIDIA Isaac Lab.
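When post-training on both real and synthetic data, one simple option is to fix the share of real data in each batch. Without a fixed share, a large synthetic pool can drown out the scarce real episodes. The sketch below is minimal and framework-agnostic, assuming episodes are already loaded into two lists. The function name and the 25% real fraction in the usage example are hypothetical choices, not GR00T N1 defaults.

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")

def mixed_batches(real: Sequence[T], synthetic: Sequence[T], real_fraction: float,
                  batch_size: int, num_batches: int, seed: int = 0) -> List[List[T]]:
    """Draw batches in which each item is a real episode with probability
    `real_fraction` and a synthetic one otherwise (sampling with replacement)."""
    if not 0.0 <= real_fraction <= 1.0:
        raise ValueError("real_fraction must be between 0 and 1")
    rng = random.Random(seed)  # fixed seed so the mixture is reproducible
    return [
        [rng.choice(real) if rng.random() < real_fraction else rng.choice(synthetic)
         for _ in range(batch_size)]
        for _ in range(num_batches)
    ]

# Hypothetical pools: 50 real episodes versus 5,000 synthetic ones
real_eps = [f"real_{i}" for i in range(50)]
synth_eps = [f"synth_{i}" for i in range(5000)]
batches = mixed_batches(real_eps, synth_eps, real_fraction=0.25, batch_size=64, num_batches=100)
share = sum(ep.startswith("real") for b in batches for ep in b) / (64 * 100)
print(f"real share: {share:.2f}")
```

If both pools were simply concatenated and sampled uniformly, real episodes would make up only about 1% of each batch. Fixing the fraction keeps them in every batch, at the cost of revisiting each real episode more often.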

As Bernt Børnich, CEO of 1X Technologies, put it, “The future development focus of humanoid robots lies in adaptability and learning ability.” GR00T N1 fits squarely into this industry trend. It combines efficient, high-quality datasets with support for pre-training, post-training, and inference, shifting humanoid robot R&D into a higher gear across the board. Early adopters of GR00T N1 include leading robotics companies such as 1X Technologies, Agility Robotics, Boston Dynamics, Mentee Robotics, and NEURA Robotics.

The Blackwell architecture provides strong momentum for agents

As mentioned above, GR00T N1 is the first in NVIDIA's series of fully customizable models and a key result of the GR00T platform update. Beyond the foundation model and data pipeline, NVIDIA Jetson provides a scalable, powerful computing platform for deploying humanoid robot models.

GR00T workflow diagram, source: NVIDIA

The NVIDIA Jetson platform already offers several chip options for robot deployment. For example, the Jetson Orin series offers seven modules sharing the same architecture, including Jetson AGX Orin, Jetson Orin NX, and Jetson Orin Nano, to suit humanoid robot models of different sizes. The top module, Jetson AGX Orin, delivers up to 275 trillion operations per second (TOPS) and eight times the multimodal AI inference performance of the previous generation. This lets trained humanoid robot models run inference quickly.

Humanoid robot developers are even more excited about the new Jetson Thor series, a computing platform NVIDIA designed specifically for humanoid robots. Jetson Thor integrates a high-performance CPU, powerful AI compute cores, a functional safety module, and 100 Gb/s of Ethernet bandwidth. With these, humanoid robots can run complex multimodal AI models, process real-time multimodal sensor data efficiently, and handle demanding workloads. NVIDIA positions it as a platform for deploying the next generation of general-purpose humanoid robots. Deepu Talla, Vice President of Robotics and Edge Computing at NVIDIA, has previously stated that Jetson Thor's computing performance can reach 1,050 TOPS.

Jetson Thor's performance comes from the NVIDIA Blackwell architecture behind it, which brings breakthroughs in generative AI and accelerated computing. Blackwell-architecture GPUs pack 208 billion transistors and are manufactured on a custom-built TSMC 4NP process. All NVIDIA Blackwell products feature two reticle-limited dies, connected by a 10 TB/s chip-to-chip interconnect into a single, unified GPU.

Beyond raw compute, the Blackwell architecture includes many optimizations that can benefit chips built on it for the humanoid robot market. For instance, Blackwell features the second-generation Transformer Engine. It combines custom NVIDIA Blackwell Tensor Core technology with innovations from NVIDIA TensorRT-LLM and the NeMo framework to accelerate inference and training of large language models (LLMs) and mixture-of-experts (MoE) models.

With the release of the GR00T N1 dataset and model and related offerings, demand for chips for general-purpose humanoid robots is expected to rise significantly. That demand spans pre-training, post-training, and deployed inference, and these workloads place different demands on numerical precision and compute scale. Blackwell Tensor Cores add new precision options, including new community-defined microscaling formats. These formats deliver high accuracy and can easily stand in for larger-precision formats. This flexibility supports both training and inference for humanoid robots.

Another feature of the second-generation Transformer Engine matters greatly for humanoid robots: a fine-grained scaling technique called micro-tensor scaling. It optimizes performance and accuracy, enabling 4-bit floating-point (FP4) AI. It also doubles both the performance and the size of next-generation models that memory can support, while maintaining high accuracy. This helps humanoid robots process multi-dimensional data such as visual recognition, natural language interaction, and force-control feedback in real time, significantly strengthening their decision-making. With FP4 precision and a hardware decompression engine, the Blackwell architecture can also reduce the power consumption of humanoid robot inference. This matters because general-purpose humanoid robots mainly run on batteries. Their limited body space rules out expanding battery capacity the way cars can, so energy efficiency is a key metric.
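The idea behind micro-tensor scaling can be emulated in software. A tensor is split into small blocks, each block gets its own scale factor, and every element is stored as a 4-bit float relative to that scale. The sketch below assumes the block-scaled FP4 (E2M1) layout of the OCP Microscaling (MX) formats: 32-element blocks with an 8-bit power-of-two shared scale. It is an illustrative emulation, not how Blackwell's Transformer Engine or any NVIDIA library implements FP4. Blackwell's own FP4 variants may use different block sizes and scale encodings, and hardware rounds ties to even rather than downward.

```python
import math
from typing import List, Tuple

# Non-negative magnitudes representable in FP4 E2M1 (1 sign, 2 exponent, 1 mantissa bit)
FP4_VALUES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
FP4_EMAX = 2  # exponent of the largest value: 6.0 = 1.5 * 2**2

Block = Tuple[float, List[float]]  # (shared scale, FP4-representable elements)

def quantize_block(block: List[float]) -> Block:
    """Quantize one block to FP4 values that share a single power-of-two scale."""
    amax = max(abs(v) for v in block)
    if amax == 0.0:
        return 1.0, [0.0] * len(block)
    # Shared scale maps the block's largest magnitude into FP4's top exponent range
    scale = 2.0 ** (math.floor(math.log2(amax)) - FP4_EMAX)
    codes = []
    for v in block:
        mag = min(abs(v) / scale, FP4_VALUES[-1])              # saturate at the FP4 maximum
        nearest = min(FP4_VALUES, key=lambda q: abs(q - mag))  # nearest value, ties go lower
        codes.append(math.copysign(nearest, v))
    return scale, codes

def quantize(values: List[float], block_size: int = 32) -> List[Block]:
    """Split a flattened tensor into blocks, each with its own scale."""
    return [quantize_block(values[i:i + block_size])
            for i in range(0, len(values), block_size)]

def dequantize(blocks: List[Block]) -> List[float]:
    """Rebuild approximate values: each element times its block's scale."""
    return [scale * c for scale, codes in blocks for c in codes]

def bits_per_element(elem_bits: int = 4, scale_bits: int = 8, block_size: int = 32) -> float:
    """Storage cost per element, including the shared per-block scale."""
    return elem_bits + scale_bits / block_size

# Small weights in one block, a large outlier in the next
weights = [0.02 * i for i in range(-16, 16)] + [6.0] + [0.01] * 31

def max_err(block_size: int) -> float:
    restored = dequantize(quantize(weights, block_size))
    return max(abs(a - b) for a, b in zip(weights, restored))

print(f"per-block error {max_err(32):.3f} vs whole-tensor error {max_err(len(weights)):.3f}")
print(bits_per_element())
```

The comparison shows why per-block scaling matters. With one scale for the whole tensor, the outlier forces a coarse grid that rounds many small weights to 0 or 0.5. With per-block scales, only the outlier's own block pays that price. At 4.25 bits per value including scales, storage is roughly half that of FP8, consistent with the claim that FP4 doubles the model size a given memory can hold.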

In addition, Blackwell includes NVIDIA's confidential computing technology. It uses strong hardware-based security to protect sensitive data and AI models from unauthorized access, strengthening data security for AI agents such as humanoid robots. The Blackwell architecture is therefore more than a compute upgrade. It brings broad improvements in performance, security, and other areas, helping humanoid robots move from the lab into industrial, service, special-operations, and other scenarios.

Conclusion

The field is moving from data silos to ecosystem collaboration, and from scenario-bound models to broad generalization. With the NVIDIA Isaac GR00T N1 dataset and model, general-purpose humanoid robot R&D is no longer held back by a lack of data, and it gains an efficient paradigm of “data synthesis + intelligent reasoning”. With only a small amount of demonstration data, developers can build models that emulate the decision-making logic of the human brain. This helps general-purpose humanoid robots reach complex scenarios such as home services, industrial sorting, and medical care more quickly. In this process, the Jetson AGX Thor computing platform and the Blackwell architecture have become key enablers, equipping humanoid robots with high-performance “intelligent engines”.

In the future, with the deep integration of physical AI and generative AI, humanoid robots will move from “customized tools” to “universal intelligent agents”, truly integrating into every corner of human life and ushering in a brand-new era of human-robot collaboration.
